Mahzabin Khan

Apr 7, 2021

The very first step after building a linear regression model is to check whether your model meets the assumptions of linear regression. These assumptions are a vital part of assessing whether the model is correctly specified. In this blog I will go over what the assumptions of linear regression are and how to test if they are met using R.

Let’s get started!

There are primarily five assumptions of linear regression. They are:

In this section I will show you how to test each of the assumptions in R. I am using RStudio version 1.4.1103. Before testing the assumptions, you need to have a fitted model.
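As a minimal sketch of that prerequisite, the snippet below fits a model on R's built-in mtcars data. The dataset, the predictors, and the object name `model` are assumptions chosen for illustration; substitute your own data and formula.

```r
# Fit an example linear regression on the built-in mtcars dataset.
# The dataset and predictors are illustrative assumptions, not from this post.
model <- lm(mpg ~ wt + hp, data = mtcars)

# Inspect the coefficients and overall fit before checking the assumptions.
print(summary(model))
```

Any object returned by `lm()` can be passed to the diagnostic calls used in the rest of this post.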

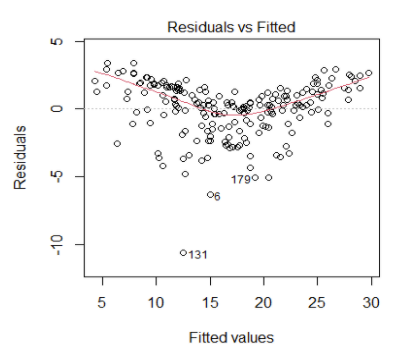

We can check the linearity of the data by looking at the Residuals vs Fitted plot. Ideally, this plot shows no pattern, and the red line (the LOWESS smoother) is approximately horizontal at zero.

Here is the code, where model_name is your fitted model object: plot(model_name, 1)

This is what we want to see:

[Figure: Residuals vs Fitted plot]

This is what we don’t want to see:

In the above plot, we can see that there is a clear pattern in the residual plot. This would indicate that we failed to meet the assumption that there is a linear relationship between the predictors and the outcome variable.
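To make the contrast concrete, here is a hedged sketch on simulated data (the data, seed, and object names are assumptions for illustration). A straight-line fit to a curved relationship should show a clear bend in the red line, while a fit that includes a squared term should look much flatter.

```r
set.seed(42)  # make the simulated data reproducible

# Simulated data (an illustrative assumption): y depends on x quadratically.
x <- runif(100, min = 0, max = 10)
y <- 2 + 0.5 * x^2 + rnorm(100, sd = 2)

# A straight-line fit misses the curvature; adding a squared term captures it.
linear_fit    <- lm(y ~ x)
quadratic_fit <- lm(y ~ x + I(x^2))

# Draw the two Residuals vs Fitted plots side by side.
old_par <- par(mfrow = c(1, 2))
plot(linear_fit, which = 1)     # expect a curved red line
plot(quadratic_fit, which = 1)  # expect a roughly flat red line near zero
par(old_par)
```

Adding a polynomial term is only one possible remedy; transforming a predictor is another option, depending on the data.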

The easiest way to check the assumption of independence is the Durbin-Watson test. We can run this test on our model with the durbinWatsonTest function from the car package (it is not part of base R, so install and load car first). The output includes a p-value, which will help you determine whether the assumption is met.

Here is the code, where model_name is your fitted model object: durbinWatsonTest(model_name)

The null hypothesis states that the errors are not autocorrelated (they are independent). Thus, if we get a p-value > 0.05, we fail to reject the null hypothesis. This means the test found no significant evidence of autocorrelation, so we can treat the independence assumption as met!
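The sketch below shows one way this check might look end to end. The mtcars model is an assumption for illustration, and because its rows have no natural time order, the result is only a demonstration of the mechanics. The statistic is first computed by hand from the residuals, and the car function is called only if the package is installed.

```r
# Example model on built-in data (an illustrative assumption).
model <- lm(mpg ~ wt + hp, data = mtcars)

# Durbin-Watson statistic computed by hand from the residuals.
# It lies between 0 and 4; values near 2 suggest little first-order autocorrelation.
e <- residuals(model)
dw_stat <- sum(diff(e)^2) / sum(e^2)
print(dw_stat)

# If the car package is installed, its test also reports a p-value.
if (requireNamespace("car", quietly = TRUE)) {
  print(car::durbinWatsonTest(model))
}
```

By default, car estimates the p-value by simulation, so it can vary slightly between runs unless you set a seed.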

We can easily check that the residual errors have a mean of zero by looking at the same Residuals vs Fitted plot. Ideally, the red line is flat at 0, which would indicate that the residual errors have a mean value of zero across the range of fitted values.

[Figure: Residuals vs Fitted plot]

In the above plot, we can see that the red line is above 0 for low fitted values and high fitted values. This indicates that the residual errors don’t always have a mean value of 0.
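A rough numeric companion to the plot is to average the residuals within ranges of fitted values. This is a sketch under assumptions: the mtcars model and the choice of three bins are illustrative only. Note that for a model with an intercept, the overall residual mean is zero by construction, so the per-bin means are the informative part.

```r
# Example model on built-in data (an illustrative assumption).
model <- lm(mpg ~ wt + hp, data = mtcars)

# With an intercept, the overall residual mean is zero up to rounding error,
# so this number alone cannot reveal a problem.
overall_mean <- mean(residuals(model))
print(overall_mean)

# Average the residuals within three bins of fitted values
# (the choice of three bins is arbitrary).
fitted_bin <- cut(fitted(model), breaks = 3)
bin_means <- tapply(residuals(model), fitted_bin, mean)
print(bin_means)
```

Bin means that sit well away from zero at the low and high ends correspond to the red line lifting off zero in the plot.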

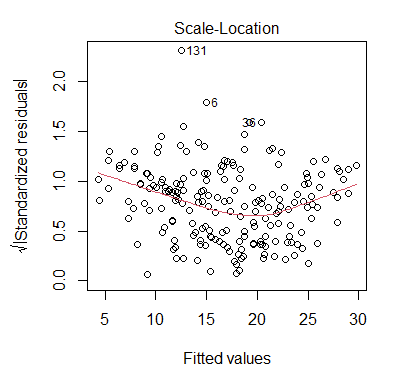

We can check the constant variance assumption using the Scale-Location plot. This plot shows the fitted values against the square root of the absolute standardized residuals. Ideally, the points are spread equally around a roughly horizontal red line, which would indicate constant variance.

Here is the code, where model_name is your fitted model object: plot(model_name, 3)

This is what we want to see:

[Figure: Scale-Location plot]

This is what we don't want to see:

In the above plot, we can see that the residual points are not equally spread out. Thus, this assumption is not met. One common solution to this problem is to apply a log or square root transformation to the outcome variable.
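Here is a hedged sketch of the transformation idea on simulated data (the data, seed, and object names are assumptions for illustration). The outcome is generated with multiplicative noise, so its spread grows with its mean; refitting on the log scale should even out the spread.

```r
set.seed(1)  # make the simulated data reproducible

# Simulated data (an illustrative assumption) with multiplicative noise,
# so the spread of y grows with its mean.
x <- runif(200, min = 1, max = 10)
y <- exp(0.3 * x + rnorm(200, sd = 0.3))

raw_fit <- lm(y ~ x)       # outcome on its original scale
log_fit <- lm(log(y) ~ x)  # log-transformed outcome

# Rough numeric summary: correlation between absolute residuals and fitted values.
# Values near zero are consistent with constant variance.
spread_raw <- cor(abs(residuals(raw_fit)), fitted(raw_fit))
spread_log <- cor(abs(residuals(log_fit)), fitted(log_fit))
print(c(raw = spread_raw, log = spread_log))

# Compare the Scale-Location plots side by side.
old_par <- par(mfrow = c(1, 2))
plot(raw_fit, which = 3)
plot(log_fit, which = 3)
par(old_par)
```

Keep in mind that a transformed outcome changes how the coefficients are interpreted, and a log transformation requires a strictly positive outcome.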

We can also check this assumption with the Non-Constant Variance (NCV) score test, using the ncvTest function from the car package. Make sure you install and load car before running the test.

Here is the code, where model_name is your fitted model object: ncvTest(model_name)

This will output a p-value, which will help you determine whether your model meets the assumption. The null hypothesis states that the error variance is constant. Thus, if you get a p-value > 0.05, you fail to reject the null. This means the test found no significant evidence of non-constant variance, so you can treat the assumption as met!
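A minimal sketch of running the test is shown below. It assumes the car package, where the function is spelled ncvTest, and uses an mtcars model purely for illustration. The call is guarded so the script still runs if car is not installed.

```r
# Example model on built-in data (an illustrative assumption).
model <- lm(mpg ~ wt + hp, data = mtcars)

if (requireNamespace("car", quietly = TRUE)) {
  # Score test for non-constant error variance against the fitted values.
  ncv_result <- car::ncvTest(model)
  print(ncv_result)
  ncv_p <- ncv_result$p  # p-value stored on the returned object
} else {
  message("Install car first: install.packages('car')")
  ncv_p <- NA_real_
}
```

Reading the p-value off the result object makes it easy to apply the 0.05 threshold in a script, for example with `ncv_p > 0.05`.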

The remaining assumption can only be validated with knowledge of the study design or data collection, so we will not cover it in this blog.

And there you have it!

While this is only a short list, these are my preferred ways to check linear regression assumptions! I hope this blog answered some of your questions and helped you in your modeling journey!
